Meta AI has released the Segment Anything Model (SAM), a computer vision model that can “cut out” an object in an image from a prompt as simple as a single click. Because SAM generalizes zero-shot, it can segment unfamiliar objects and images without additional training, which makes it useful for applications such as image editing, 3D modeling, and augmented reality.

Key Features of SAM

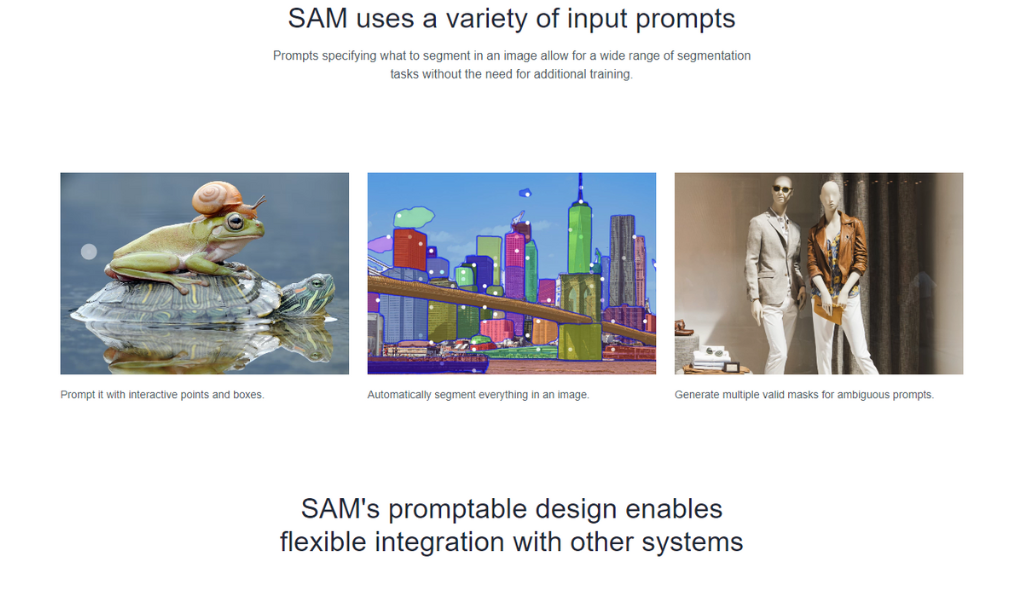

- Promptable Segmentation System: SAM uses a variety of input prompts to specify what to segment in an image, allowing for a broad range of segmentation tasks without requiring extra training. Users can prompt SAM with interactive points or bounding boxes, or have it automatically segment everything in an image.

- Flexible Integration: SAM can take input prompts from other systems, such as a user’s gaze from an AR/VR headset to select an object, or bounding boxes from an object detector to enable text-to-object segmentation.

- Extensible Outputs: SAM’s output masks can be used as inputs to other AI systems. These masks can be applied to tasks such as object tracking in videos, image editing applications, 3D modeling, or creative tasks like collaging.

- Zero-shot Generalization: SAM has learned a general notion of what objects are, enabling it to generalize to unfamiliar objects and images without needing additional training.
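To make the "extensible outputs" idea concrete, here is a minimal sketch of how a downstream tool might consume a segmentation mask to cut an object out of an image. It does not call SAM itself: the mask is assumed to be a plain grid of booleans, and the helper names `cut_out` and `mask_bounding_box` are hypothetical, chosen only for this illustration.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]            # (R, G, B)
RGBAPixel = Tuple[int, int, int, int]   # (R, G, B, A)
Mask = List[List[bool]]                 # True marks pixels belonging to the object


def cut_out(image: List[List[Pixel]], mask: Mask) -> List[List[RGBAPixel]]:
    """Keep pixels where the mask is True; make all others fully transparent."""
    if len(image) != len(mask) or any(len(r) != len(m) for r, m in zip(image, mask)):
        raise ValueError("image and mask must have the same height and width")
    return [
        [(r, g, b, 255) if keep else (0, 0, 0, 0)
         for (r, g, b), keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, mask)
    ]


def mask_bounding_box(mask: Mask) -> Optional[Tuple[int, int, int, int]]:
    """Return (x_min, y_min, x_max, y_max) of the True pixels, or None if empty."""
    xs = [x for row in mask for x, value in enumerate(row) if value]
    ys = [y for y, row in enumerate(mask) if any(row)]
    if not xs:
        return None
    return (min(xs), min(ys), max(xs), max(ys))


if __name__ == "__main__":
    # A tiny 2x2 "image" and a hand-written mask standing in for a model output.
    image = [[(10, 20, 30), (40, 50, 60)],
             [(70, 80, 90), (100, 110, 120)]]
    mask = [[True, False],
            [False, True]]
    print(cut_out(image, mask))
    print(mask_bounding_box(mask))
```

The bounding box helper hints at the reverse direction as well: a box derived from one system's output could serve as a box prompt for another, in the spirit of the integration described above.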

Training and Dataset of SAM

SAM’s capabilities stem from its training on millions of images and masks collected using a model-in-the-loop “data engine.” Researchers used SAM and its data to interactively annotate images and update the model, repeating this cycle numerous times to refine both the model and the dataset. The final dataset comprises more than 1.1 billion segmentation masks collected on approximately 11 million licensed and privacy-preserving images, an average of roughly 100 masks per image.

Efficient and Flexible Model Design

Designed for efficiency, SAM is composed of a one-time image encoder and a lightweight mask decoder. The image encoder runs once per image, and the mask decoder can then run in a web browser in just a few milliseconds per prompt. This split keeps interactive prompting fast, which allows SAM to power its data engine without compromising on performance.
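The encode-once, decode-per-prompt split can be sketched as a small wrapper that caches the expensive step. This is only an illustration of the design pattern, not SAM's implementation: the class `PromptableSegmenter` and the functions `toy_encode` and `toy_decode` are hypothetical stand-ins, and the toy "decoder" simply selects pixels that share the clicked pixel's value.

```python
from typing import Callable, List, Optional, Tuple

Image = List[List[int]]      # toy grayscale image
Mask = List[List[bool]]
Point = Tuple[int, int]      # (x, y) click position


class PromptableSegmenter:
    """Run a costly encoder once per image and a cheap decoder once per prompt."""

    def __init__(self, encode: Callable[[Image], Image],
                 decode: Callable[[Image, Point], Mask]) -> None:
        self._encode = encode
        self._decode = decode
        self._embedding: Optional[Image] = None
        self.encoder_calls = 0  # counts how often the expensive step runs

    def set_image(self, image: Image) -> None:
        """Compute and cache the embedding for a new image."""
        self._embedding = self._encode(image)
        self.encoder_calls += 1

    def predict(self, prompt: Point) -> Mask:
        """Answer one prompt using the cached embedding only."""
        if self._embedding is None:
            raise RuntimeError("call set_image() before predict()")
        return self._decode(self._embedding, prompt)


def toy_encode(image: Image) -> Image:
    # Stand-in for a heavy image encoder: just copies the image.
    return [list(row) for row in image]


def toy_decode(embedding: Image, point: Point) -> Mask:
    # Stand-in for a mask decoder: select pixels equal to the clicked value.
    x, y = point
    target = embedding[y][x]
    return [[value == target for value in row] for row in embedding]


if __name__ == "__main__":
    segmenter = PromptableSegmenter(toy_encode, toy_decode)
    segmenter.set_image([[0, 0, 1], [0, 1, 1], [2, 2, 1]])
    for click in [(0, 0), (2, 0), (0, 2)]:
        print(click, segmenter.predict(click))
    print("encoder calls:", segmenter.encoder_calls)
```

However many prompts arrive for the same image, the encoder cost is paid once, which is what makes per-prompt latency small in an interactive setting.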

Acknowledgements

The research and development of SAM were made possible by a dedicated team of researchers, project leads, and contributors from Meta AI.

SAM at a Glance

- Supported prompts: interactive points, bounding boxes, and automatic segmentation

- Model structure: one-time image encoder and lightweight mask decoder

- Platforms: works on various platforms and can run in a web browser

- Model size: not specified

- Inference time: a few milliseconds per prompt for the mask decoder, after the one-time image encoding

- Training data: 11 million images and 1.1 billion segmentation masks

- Training time: not specified

- Mask labels: not specified

- Video support: not specified

- Code availability: GitHub

Conclusion

Meta AI’s Segment Anything Model (SAM) is a computer vision system with the potential to change how segmentation is used in fields ranging from image editing to augmented reality. Its promptable segmentation system, flexible integration, extensible outputs, and zero-shot generalization make it a valuable tool for users and developers alike.